Four Myths of GenAI in the Enterprise, and What MIT’s Latest Study Reveals

Introduction

MIT NANDA’s latest report shows that, in 2025, enterprise GenAI is still mostly hype: disruption is limited, big firms lag in scaling up, budgets flow to the wrong places, and workflow integration, not model quality, is the real barrier.


From Hype to Reality: What MIT’s Data Really Shows

For too long, business leaders have been seduced by four persistent myths: that GenAI is universally transformative, that enterprises lead by building in-house, that capital naturally follows ROI, and that model quality is the bottleneck.

MIT’s new report, The GenAI Divide: State of AI in Business 2025, dismantles these convictions with hard data. It shows that most firms remain stuck in pilots, while the handful that succeed do so by rejecting these myths and demanding adaptive, workflow-embedded systems. Let’s debunk the myths one by one.

1. Is GenAI already transforming every industry?

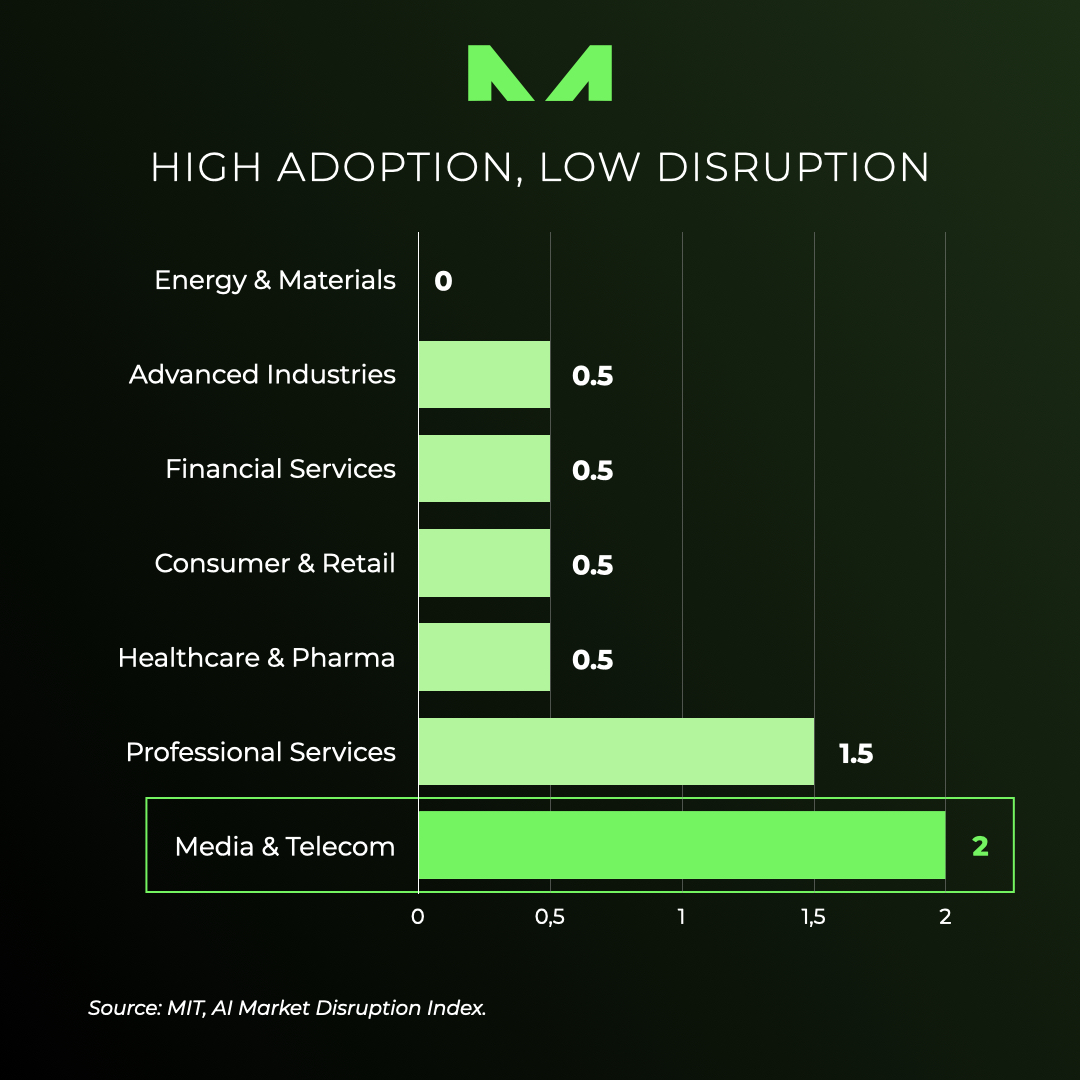

MIT’s data says no. Across nine major sectors, only Technology and Media & Telecom show clear structural disruption; the other seven display high pilot activity but little lasting change. The report’s composite disruption index (market-share shifts, AI-native revenue growth, new AI business models, behavioral change, and executive organizational moves) keeps Tech and Media at the top even after sensitivity tests, while Healthcare and Energy remain consistently low. Interviewed leaders described faster contract processing or call routing, but not rewritten business models. Bottom line: adoption is high, transformation is rare. Focus your 2025–26 roadmap on processes you can actually move, not on sweeping industry claims.

- Myth #1: GenAI is transforming every industry.

- Reality Check #1: Disruption is concentrated in Tech and Media.

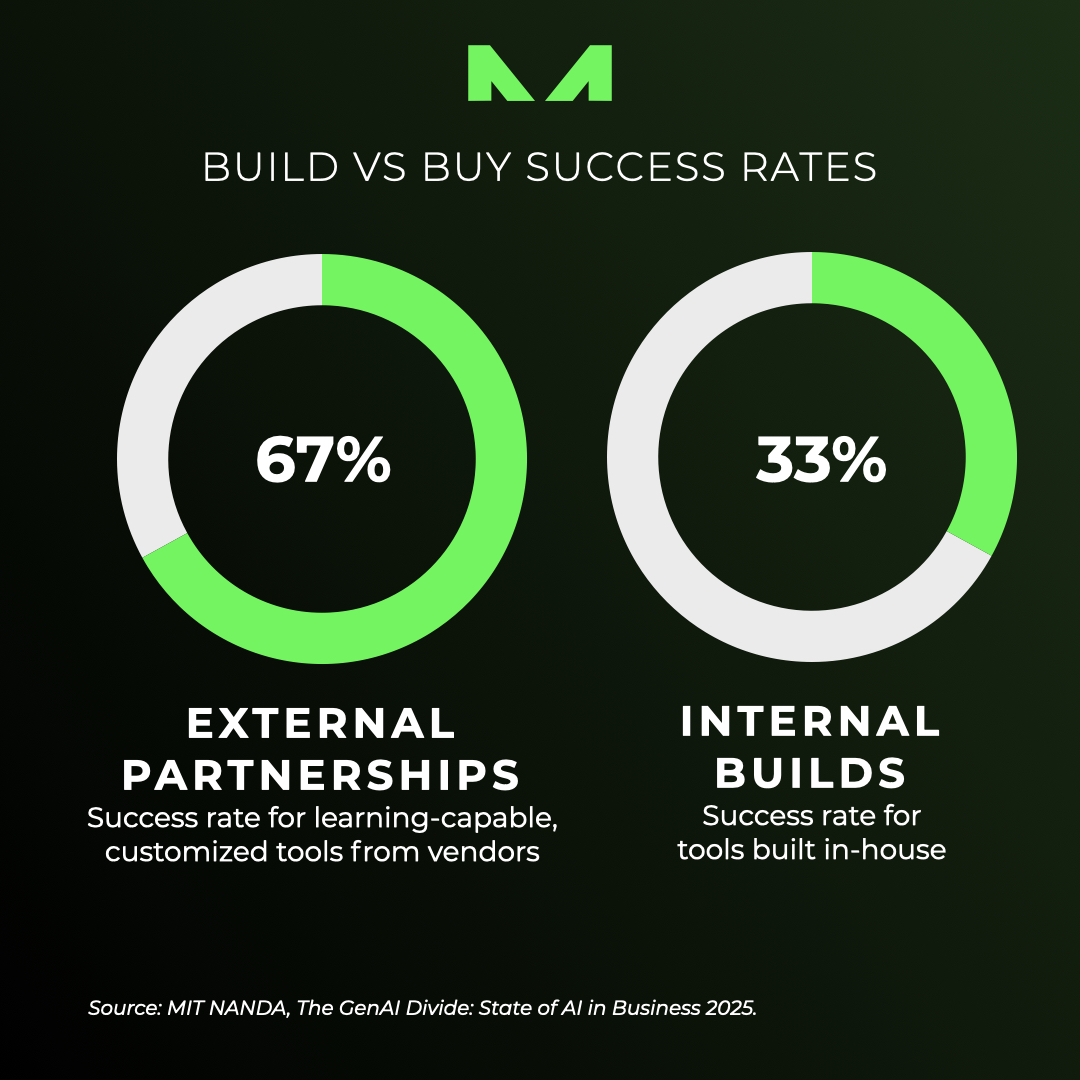

2. Are large enterprises truly leading by building GenAI in-house?

Not in outcomes. Enterprises run the most pilots, but only ~5% of custom or embedded tools reach production with measurable P&L impact. Mid-market companies scale faster, taking ~90 days from pilot to full implementation versus ~9 months for enterprises, because they select narrower workflows and co-develop with vendors. Across interviews, external partnerships were about twice as likely to reach deployment as internal builds, and employee usage was nearly double for externally built tools. Treat AI like BPO (buy, customize, and hold partners accountable for weekly learning) rather than as a big-bang platform you staff forever.

- Myth #2: Large enterprises are leading the way and building in-house.

- Reality Check #2: Big firms lead in pilot volume but lag in scale-up, and in-house projects show higher failure rates.

3. Do GenAI investments naturally flow to where ROI is highest?

The simple answer: no. The study reveals an investment bias. Asked to allocate a hypothetical GenAI budget, executives put roughly 50% into Sales & Marketing because those metrics are visible (demos, leads, open rates). Yet the clearest payback shows up in back-office work: $2–10M in annual savings from eliminating BPO in service and document processing, ~30% reductions in agency spend, and $1M+ per year from automated risk checks. Leaders admit that attribution bias, not ROI, often drives spending. The better move is to rebalance toward operations, finance, and procurement, where savings are contracted and measurable, and where learning systems compound value.

- Myth #3: GenAI investments follow ROI.

- Reality Check #3: ROI hides in the back-office, not the dashboard.

4. Is “model quality” the main thing holding GenAI back?

The report points to a different culprit: the learning gap. Pilots stall because tools don’t retain context, don’t adapt, and don’t improve inside real workflows. Users happily draft with ChatGPT yet reject enterprise tools that forget context and break on edge cases; for high-stakes work, humans are still preferred by a 9:1 margin. Early agentic systems with persistent memory and orchestration address this gap directly. Make memory, feedback loops, and a weekly improvement cadence contract terms, not roadmap promises.

How Can Enterprises Turn MIT’s Findings Into an Action Roadmap?

The GenAI Divide study makes the challenge clear, but at MINT we see it as more than a diagnosis: it’s a blueprint. Our recommendation: act on four key lessons to move from hype to measurable impact.

1. How should enterprises reassess GenAI investments?

Shift budgets away from vanity front-office experiments and toward operational automations with provable ROI. Invest in strong data governance so every dollar compounds value instead of chasing hype.

Rebalance spend to operational ROI, not demo visibility.

2. Where should enterprises start with use cases?

Embed GenAI into specific workflows chosen by line managers, not just by central innovation labs. Start with proven operations, such as advertising and media management, then scale once results prove durable.

The right use cases deliver faster, contextual wins.

3. Why rely on external vendors over internal builds?

Externally sourced solutions succeed about twice as often as homegrown tools. Partner with trusted vendors, demand customization, and contract for weekly improvement cycles that ensure the system learns inside your workflows.

Buy-and-customize beats build-from-scratch.

4. What should leaders do about “shadow AI”?

Workers already use ChatGPT and other consumer tools daily, often without IT oversight. Treat this as a signal, not a threat: set clear policies, select approved platforms, and integrate them with data governance frameworks.

Govern shadow AI before it governs you.